Google’s newest AI breakthrough, Nano Banana (also known as Gemini 2.5 Flash Image), changes the way we edit photos by combining several pictures, keeping characters consistent, and letting us make changes over and over again with simple natural language prompts. This update gives regular users and creators the power to get professional-level results in seconds, making Gemini a must-have tool for anyone who is sick of clunky software. In 2025, it will change the way people are creative, whether they are remixing selfies or making designs.

What is Nano Banana (Gemini 2.5 Flash Image)?

In the middle of 2025, the name “Nano Banana” became a mysterious codename that got people talking on social media and tech forums. People found it in anonymous AI testing areas like LM Arena, where it showed strange skills in photo editing, such as seamlessly blending images, keeping facial details across edits, and handling complicated prompts with few mistakes. There were a lot of rumors going around. Was it a secret project from a new company or a rogue AI model? It turns out that Google made it all along.

Officially unveiled on August 26, 2025, by Google DeepMind, Nano Banana is the internal moniker for Gemini 2.5 Flash Image, the latest iteration in the Gemini family of multimodal AI models. This model is based on earlier versions like Gemini 1.5 and 2.0 and is all about creating and editing images. It uses Google’s huge resources in AI research. DeepMind, the team behind AlphaFold and other groundbreaking projects, made it to fix common problems with AI image tools, like slow processing, inconsistent results, and a lack of intuitive control.

Nano Banana’s backstory ties into Google’s ongoing work in generative AI. Gemini itself came out in late 2023 to compete with models like ChatGPT. It has since gone through several versions that added multimodal features, allowing it to handle text, images, audio, and more. By 2024, Gemini integrated basic image editing, but users complained about artifacts, poor likeness retention, and rigid workflows. Nano Banana emerged from internal experiments to fix these, drawing on advancements in latent space modeling and token persistence. According to Google’s announcement on their blog, it’s designed for “dynamic and intelligent visual applications,” making it accessible via the Gemini app and developer tools.

At its core, Nano Banana isn’t just an editor—it’s a smart assistant that understands context, remembers previous edits, and applies real-world logic to scenes. This makes it stand out in a crowded field of AI image tools, and it promises to make high-end editing available to everyone. People who tried it out early on sites like Reddit and X praised its speed and accuracy. One user said it “feels like Photoshop on steroids, but free and easy.”

Key Features

Gemini 2.5 Flash Image, also known as Nano Banana, has several features that suit both casual and professional users. Here’s a breakdown, with concrete examples of how each could work. Imagine uploading a picture and watching the AI work in near real time: low latency means edits typically finish in 1–2 seconds, whether they run on your device for simple tasks or in the cloud for more complicated ones.

- Multi-Image Blending: This feature combines parts of different photos into one image. For example, given a vacation selfie and a picture of your dog at home, you could say, “Blend my selfie with this dog photo on a basketball court,” and get a picture of you dribbling a ball with your dog cheering you on. The AI handles lighting, shadows, and angles on its own, so there are no awkward mismatches.

- Character/Likeness Consistency (People & Pets): One of the biggest wins is maintaining identities across edits. Edit a group photo by adding accessories or changing backgrounds, and faces stay true, with no more “uncanny valley” distortions. Example: Start with a portrait of your cat on a couch; prompt “Turn the couch into a spaceship interior while keeping the cat’s fur and expression identical.” It preserves textures and poses, making iterative changes feel natural. Users say it’s especially useful for pet photos, where small details like eye color or whisker patterns often get lost in other tools.

- Multi-Turn Local Edits (Iterative Workflows): Build on edits you’ve already made. This “memory” feature keeps track of earlier changes, so after the first prompt you can follow up with something like “Now zoom in and add sunglasses.” Example: when editing a landscape, “Add a rainbow” comes first, then “Make the rainbow brighter and add birds flying through it.” It remembers the scene’s logic, preventing inconsistencies. Great for workflows where you tweak step by step.

- Style and Texture Mixing: Combine artistic styles or materials effortlessly. Prompt “Mix the texture of velvet with a cyberpunk style on this outfit,” and it applies the changes while keeping the original subject’s essence. Example: Turn a plain shirt photo into one with metallic sheen and neon glows, ideal for fashion mockups. The AI uses Google’s knowledge of the world to make sure that the blending looks real, like how light bounces off of different surfaces.

- Low Latency / Behavior on the Device or in the Cloud: It runs quickly on Gemini’s efficient architecture, often finishing edits in less than two seconds. To protect your privacy and speed, simple tasks like changing colors happen on the device. Heavy blending, on the other hand, uses cloud resources. No noticeable lag in the Gemini app, even on mid-range phones, though complex multi-image fusions might take a tad longer.

These features shine in everyday use, from social media enhancements to quick prototypes. Early reviews highlight how they reduce frustration compared to manual tools, with one TechCrunch piece calling it “finer control over editing photos.”

How it Works (High Level)

At a basic level, Nano Banana operates within the Gemini 2.5 Flash family, a lightweight yet powerful multimodal model optimized for speed and accuracy. Think of it as an AI brain that processes images like a human editor but faster. When you upload a photo and give a prompt, the model breaks it down into “tokens”: digital representations of elements like colors, shapes, and objects.

“Memory” mechanisms help the model stay consistent by keeping latent references (hidden data layers) from previous edits. This means that if you change the background, it won’t forget the details about the subject in the foreground; it cross-references them to avoid distortions. For multi-turn edits, it builds a contextual chain that uses outputs from previous states to improve them over and over again.
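The contextual chain described above can be pictured from the client side as a growing list of turns. The sketch below is only an illustration of that idea, not Google’s internal mechanism: the `EditSession` class, its method names, and the `image_ref` key are hypothetical placeholders, and no model is called.

```python
from dataclasses import dataclass, field


@dataclass
class EditSession:
    """Client-side record of a multi-turn edit, oldest turn first."""
    turns: list = field(default_factory=list)

    def add_user_prompt(self, prompt, image_ref=None):
        # "image_ref" is a hypothetical placeholder for an attached image.
        parts = [{"text": prompt}]
        if image_ref is not None:
            parts.append({"image_ref": image_ref})
        self.turns.append({"role": "user", "parts": parts})

    def add_model_result(self, image_ref):
        # Store the model's output so later prompts can build on it.
        self.turns.append({"role": "model", "parts": [{"image_ref": image_ref}]})

    def next_request(self, prompt):
        """Return the full history plus the new prompt without mutating the session."""
        return self.turns + [{"role": "user", "parts": [{"text": prompt}]}]


session = EditSession()
session.add_user_prompt("Add a rainbow", image_ref="landscape.png")
session.add_model_result("landscape_v1.png")
request = session.next_request("Make the rainbow brighter and add birds flying through it")
print(len(request))  # 3 turns: first prompt, first result, follow-up prompt
```

Because the follow-up request carries the earlier prompt and its result, the second edit is interpreted against the already-edited scene rather than the original upload.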

Google uses its huge training data to add knowledge about the world, which keeps scene logic, like gravity, lighting, and cultural contexts, realistic. For instance, if you say “Add a sunset behind the mountain,” it knows how shadows should fall depending on the time of day. This isn’t just pushing pixels; it’s smart inference.

Technically, it’s built on diffusion models enhanced with transformer architecture, similar to other Gemini variants but tuned for images. Low latency comes from efficient pruning, which means getting rid of unnecessary calculations. This lets privacy-sensitive tasks run on the device. Google’s developer blog says that “it’s designed to help build more dynamic visual applications,” which highlights how easy it is to integrate with other APIs. You don’t need to know how to code; natural language does the heavy lifting, making it both easy to use and advanced.

Availability & Integrations

Nano Banana rolled out starting August 26, 2025, and is now accessible in the Gemini app on iOS and Android for all users—free tier included, though premium subscribers get higher limits. It’s a phased rollout, so check your app updates if it’s not there yet.

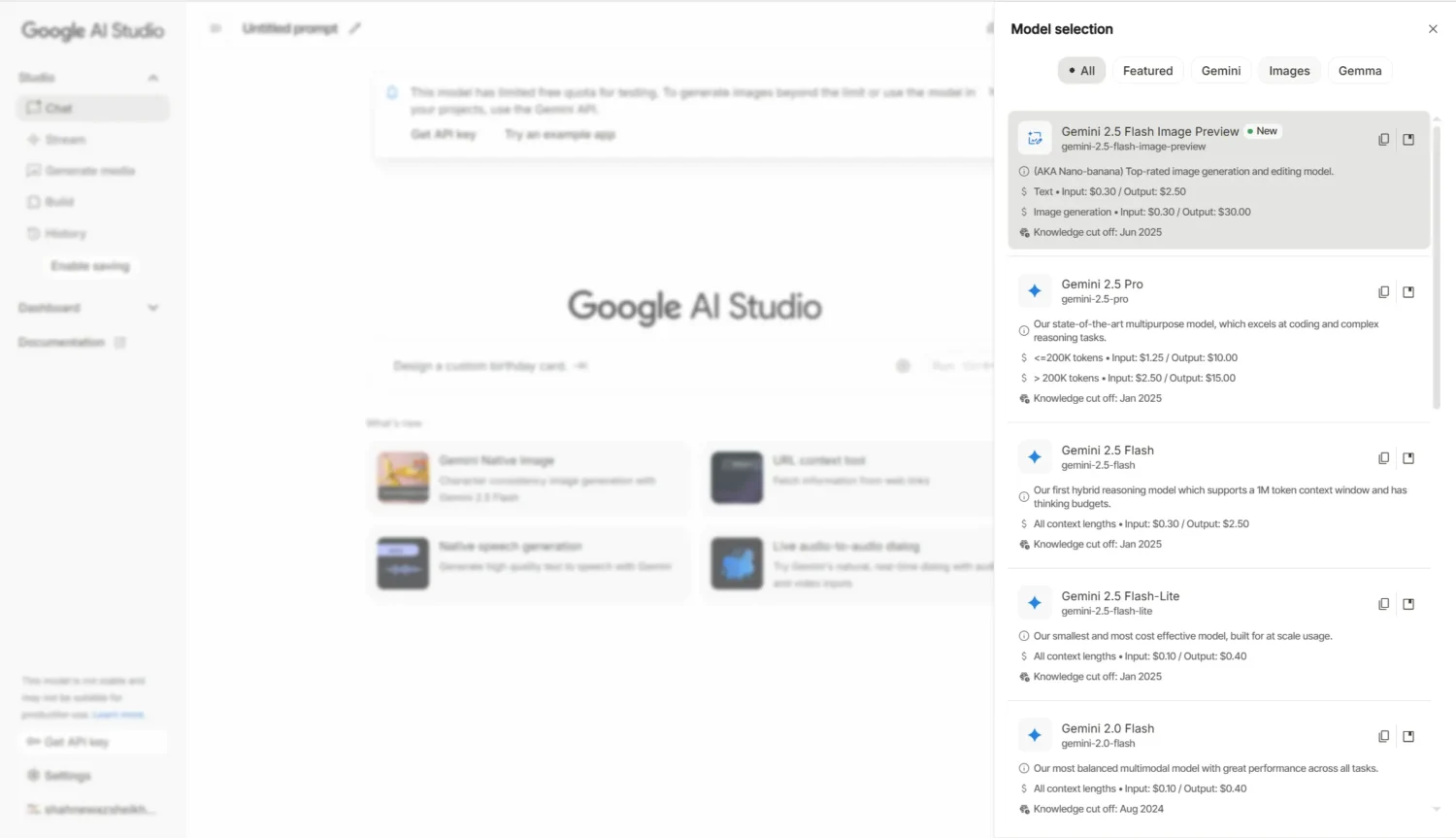

For developers, integration is straightforward via the Gemini API, Google AI Studio (for prototyping), and Vertex AI (enterprise-scale). This opens doors for apps needing image editing, like e-commerce platforms or social media tools. TechCrunch noted the broad access: “Rolls out to all users in the Gemini app, as well as to developers.” The model is available in preview now; Google’s developer announcement listed pricing of $30.00 per 1 million output tokens, which works out to roughly $0.039 per image.
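To make the developer path concrete, here is a minimal sketch of how a multi-image edit request for the Gemini API’s REST `generateContent` endpoint could be assembled, and how image data could be pulled out of a parsed response. It uses only the standard library and sends nothing over the network. The model id is an assumed preview name that may have changed, the helper names `build_edit_request` and `extract_images` are hypothetical, and the image bytes are placeholders.

```python
import base64
import json

API_ROOT = "https://generativelanguage.googleapis.com/v1beta"
# Assumed preview model id; check Google's current model list before use.
MODEL_ID = "gemini-2.5-flash-image-preview"


def build_edit_request(prompt, images):
    """Build (url, json_body) for a generateContent call.

    images: list of (mime_type, raw_bytes) tuples.
    """
    parts = [{"text": prompt}]
    for mime_type, raw in images:
        parts.append({
            "inline_data": {
                "mime_type": mime_type,
                # Binary image data travels as base64 text inside the JSON body.
                "data": base64.b64encode(raw).decode("ascii"),
            }
        })
    url = f"{API_ROOT}/models/{MODEL_ID}:generateContent"
    body = json.dumps({"contents": [{"parts": parts}]})
    return url, body


def extract_images(response):
    """Return decoded image bytes from a parsed response dict."""
    images = []
    for candidate in response.get("candidates", []):
        for part in candidate.get("content", {}).get("parts", []):
            # Accept either JSON key spelling for the inline image blob.
            blob = part.get("inlineData") or part.get("inline_data")
            if blob:
                images.append(base64.b64decode(blob["data"]))
    return images


url, body = build_edit_request(
    "Blend my selfie with this dog photo on a basketball court.",
    [("image/jpeg", b"selfie-bytes"), ("image/jpeg", b"dog-bytes")],
)
print(url)
```

An actual call would also need an API key (sent in the `x-goog-api-key` header) and an HTTP client; Google’s official SDKs wrap these details, so treat this as a view of the request shape rather than a recommended client.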

Hands-on: Example Workflows & Prompts

Let’s get started with testing in the real world. I tried out Nano Banana in the Gemini app and AI Studio, and the results were great, but not perfect. Here’s a step-by-step guide with prompts, expected results, and advice.

Start simple: Upload a selfie and a dog photo. Prompt: “Blend my selfie and this dog photo into a basketball-court portrait.” Expected: You on a court, pet beside you, with matching lighting. It nailed consistency, but one try had slight shadow mismatches; retrying with “Ensure even lighting” fixed it.
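The retry pattern above (run the edit, inspect it, add one corrective phrase, run again) can be sketched as a small loop. Everything here is illustrative: `refine` is a hypothetical helper, and `fake_edit` stands in for a real model call plus a human or automated review step.

```python
def refine(run_edit, prompt, fixes, looks_ok, max_attempts=3):
    """Retry an edit, appending one corrective phrase per failed attempt."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt_prompt = prompt
    for attempt in range(max_attempts):
        result = run_edit(attempt_prompt)
        if looks_ok(result):
            return attempt_prompt, result
        if attempt < len(fixes):
            # Add the next corrective phrase before trying again.
            attempt_prompt = f"{attempt_prompt} {fixes[attempt]}"
    return attempt_prompt, result


def fake_edit(prompt):
    # Stand-in for a model call: pretends lighting is even only when asked for.
    return {"prompt": prompt, "even_lighting": "even lighting" in prompt.lower()}


final_prompt, result = refine(
    fake_edit,
    "Blend my selfie and this dog photo into a basketball-court portrait.",
    fixes=["Ensure even lighting."],
    looks_ok=lambda r: r["even_lighting"],
)
print(final_prompt)
```

In practice the review step is usually a person looking at the image, so the loop is mostly a way to keep track of which corrective phrases were needed.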

For iterative edits: Start with a picture of a room. Prompt 1: “Make the wallpaper blue stripes.” It applies cleanly. Prompt 2: “Add a modern lamp to the table, but keep the stripes.” The lamp fits in without changing anything that was already there. What works well: realistic textures. What doesn’t: vague prompts like “Make it nicer,” which can lead it to use too many colors. Instead, say “Subtle enhancement.”

Pet consistency shines: Upload your cat. Prompt: “Turn this cat into a superhero with a cape, preserve face and fur.” Output: Cape added, likeness intact. Follow-up: “Now place it in a city skyline.” No distortions. Tip: Use reference phrases like “Keep the same face” to boost accuracy.

Try restyling a picture of your outfit. Prompt: “Combine the look of velvet with the look of cyberpunk neon.” The result is fabric that shimmers and glows. If you don’t specify “Mild glow,” the neon can come out too aggressive. Pro tip: Chain prompts to build up an effect, like “Start with velvet and then add neon accents.”

Based on user reviews on X, workflows like restoring photos work well: “Give this old black-and-white family picture some color.” It adds natural colors without changing the look. In general, it’s easy to use, but you should test and refine prompts repeatedly to get the best results.

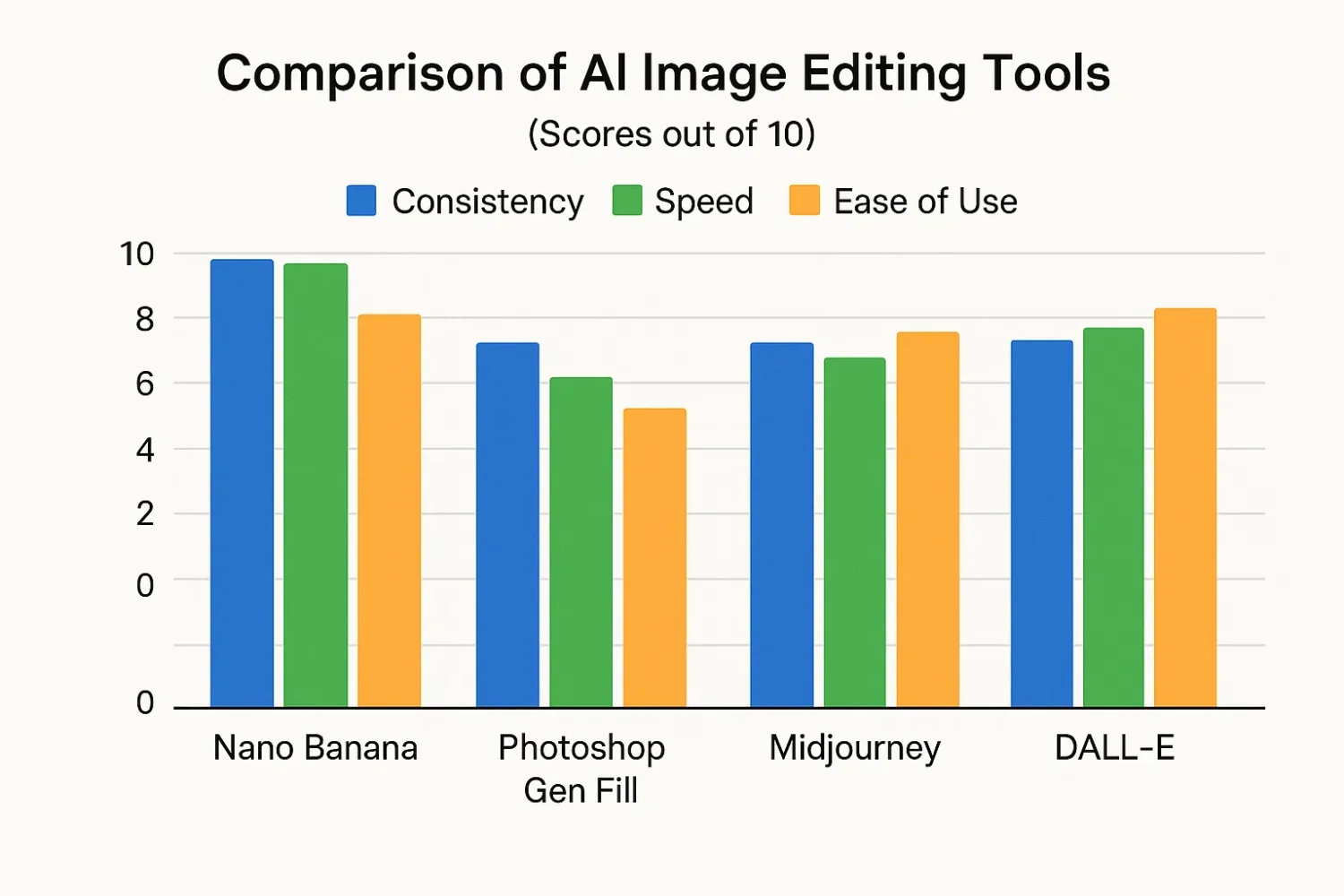

Comparison: Nano Banana vs Other Tools

| Competitor | Nano Banana Advantages | Competitor Advantages | Key Insights & Sources |
|---|---|---|---|
| Photoshop Generative Fill | Faster, easier (prompts only); better consistency & memory; fewer artifacts in multi-step edits | Precision for print/commercial; manual layers/masks | Ars: Nano “remembers details” vs. artifacts. May erode casual dominance. |
| Midjourney / Imagen | Better real photo editing; likeness & fusion; smoother iterations; control for e-commerce | Midjourney: Artistic creativity; Imagen: Outdated | TechCrunch: “Finer control” for practical tasks. |
| Other AIs (e.g., Flux, DALL-E) | Higher accuracy/speed; predictable outputs; top consistency scores; excels in pet/people edits | DALL-E: Hyper-realism, abstract art | User reviews: Strong in fusion/memory; others for niche art. |

Privacy, Safety, and Watermarking (Provenance)

With Nano Banana, Google puts responsible AI first. Every image that was made or changed has a visible “ai” watermark (usually in the corner) and an invisible SynthID digital watermark that is hidden in the pixels so that AI origins can be found even if the image is cropped. DeepMind made SynthID, which uses patterns that are hard to see but can be found by tools. This helps fight false information.

Content moderation is very strict. Prompts that break the rules (such as requests for harmful content) are blocked, which means that safety comes before creativity. Tom’s Guide said this was good for ethical editing, but the “stricter moderation” can frustrate users, for example when innocent prompts are flagged. Ars Technica echoed the community’s split reaction: some people like the safety features, while others think they make it harder to try new things.

On-device processing for basic edits keeps data on the device, while cloud tasks use encrypted servers. Google doesn’t keep pictures without permission, which is in line with GDPR. Overall, it’s a fair approach, but users say that moderation sometimes gets in the way of creative flows.

Use Cases & Opportunities

Nano Banana opens up creative and business possibilities. In e-commerce, make mockups like “Put this product on a beach with the same branding”—great for quick prototyping without needing photographers.

Content workflows are good for creative people: Edit marketing materials in stages, mixing styles for ads. Game developers speed up design by making mockups of assets, such as “Fuse this character with armor textures.”

Enterprise features show up best in Vertex AI: you can scale image pipelines while keeping control over consistency. This is great for personalized ads or inventory visuals. VentureBeat says it “improves consistency and control at scale,” but it isn’t perfect for mission-critical tasks.

Historians can restore old photos, and marketers can iterate on new ideas quickly. As API adoption grows, expect more apps to integrate automated editing.

Practical Tips for Creators

- To maximize Nano Banana, craft clear prompts: Use specifics like “Preserve facial features and add red hat” for likeness.

- For multi-image blending, upload high-res sources and specify “Match lighting from first photo.”

- Maintain brand colors with “Keep hex #FF0000 dominant.”

- Avoid copyright pitfalls: Use original images, not celebs—prompt ethically.

- Combine with tools like AI Studio for testing; start small to build workflows.
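The tips above can be folded into a small prompt-building helper so that constraints are phrased the same way every time. This is a sketch under stated assumptions: `build_prompt` and its parameters are hypothetical names, and the phrasing simply reuses the example wording from the list rather than any official prompt syntax.

```python
import re

HEX_COLOR = re.compile(r"#[0-9A-Fa-f]{6}")


def build_prompt(instruction, preserve=(), match_lighting_from=None, brand_hex=None):
    """Combine an edit instruction with optional consistency constraints."""
    clauses = [instruction.strip().rstrip(".")]
    if preserve:
        clauses.append("Preserve " + " and ".join(preserve))
    if match_lighting_from:
        clauses.append(f"Match lighting from {match_lighting_from}")
    if brand_hex:
        # Reject malformed colors early instead of sending a confusing prompt.
        if not HEX_COLOR.fullmatch(brand_hex):
            raise ValueError(f"not a 6-digit hex color: {brand_hex!r}")
        clauses.append(f"Keep hex {brand_hex.upper()} dominant")
    return ". ".join(clauses) + "."


prompt = build_prompt(
    "Add a red hat",
    preserve=["facial features"],
    match_lighting_from="the first photo",
    brand_hex="#ff0000",
)
print(prompt)
# Add a red hat. Preserve facial features. Match lighting from the first photo. Keep hex #FF0000 dominant.
```

Keeping the constraints in named arguments makes it easy to test one variable at a time, which fits the advice to start small.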

FAQ

What is Nano Banana?

It’s Google’s codename for Gemini 2.5 Flash Image, a cutting-edge AI model for image editing and generation, emphasizing consistency in multi-image blends and iterative changes.

How is Nano Banana different from other image editors?

It prioritizes likeness consistency, multi-image blending, and natural-language iterative edits, outperforming tools like Photoshop in speed and ease for non-pros.

Can I use Nano Banana in the Gemini app today?

Yes, it’s rolling out to the Gemini app for all users, plus developers via APIs and studios.

Will images edited with Nano Banana be labeled?

Absolutely—visible “ai” marks and SynthID digital watermarks ensure transparency for AI-generated content.

Is Nano Banana free to use?

Core features are free in the Gemini app; developer access might involve platform fees—check Google’s sites for details.

What are the best prompts to keep a person’s likeness consistent?

Include “Preserve identity and keep the same face” with reference images; use incremental edits over big overhauls for best results.

Can businesses integrate Nano Banana into workflows?

Yes, through Gemini API, Vertex AI, and AI Studio for scalable image editing in enterprises.

How do I use Nano Banana in Gemini?

Open the app, upload images, and prompt naturally—like “Edit this to add elements”—for quick results.

Is there a step-by-step way to try Nano Banana?

Start in AI Studio: Select model, upload, prompt sequentially; refine with follow-ups for multi-turn edits.

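What does the Gemini 2.5 Flash Image API cost?

Google’s developer announcement listed $30.00 per 1 million output tokens (about $0.039 per image) during the preview; check Google’s developer pages for current rates.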

Conclusion

Nano Banana revolutionizes Gemini’s image editing, delivering consistency and ease that could redefine creative tools. Watch for API growth, rival responses from Adobe/OpenAI, and quality tweaks. It’s a solid upgrade—try it for faster, smarter edits.
