OpenAI CEO Sam Altman has issued a stark warning about ChatGPT-5, which is expected to be released in August 2025, at a time when AI is reshaping the world in significant ways. In a candid interview on the podcast This Past Weekend with Theo Von, Altman said that he was very worried about the model’s abilities.

He said that the AI industry needs more oversight and compared its growth to that of the Manhattan Project. His remarks have set off a heated debate among people in tech, policy, and government, and have raised important moral and social questions about advanced AI.

The Warning

On July 23, 2025, Sam Altman talked about ChatGPT-5, the next version of OpenAI’s groundbreaking AI model, on This Past Weekend with Theo Von. He said that testing the model made him “scared” and “very nervous,” and he said that developing it felt “very fast.”

In a particularly evocative statement, Altman said, “While testing GPT5 I got scared,” and reflected, “Looking at it thinking: What have we done like in the Manhattan Project.” He further underscored the lack of oversight in AI development, stating, “There are NO ADULTS IN THE ROOM”.

These comments are significant for several reasons. First, they come from the CEO of OpenAI, a company that has been at the forefront of AI innovation since the launch of ChatGPT in 2022. Second, the comparison to the Manhattan Project, the scientific endeavor that produced the atomic bomb, suggests that ChatGPT-5 could have transformative yet potentially disruptive consequences.

Finally, Altman’s worry about “adults in the room” points to a severe lack of ethical and regulatory frameworks for overseeing sophisticated AI systems.

Background

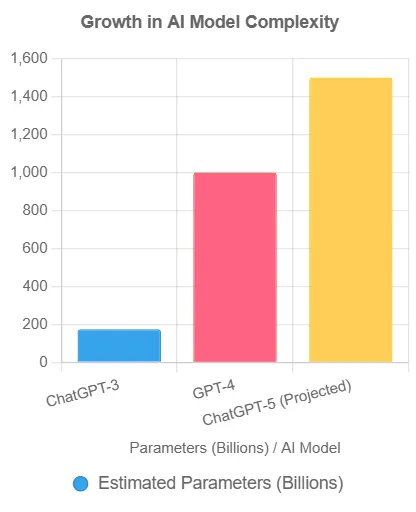

To put Altman’s warning in context, it helps to know how ChatGPT has changed the AI landscape and where it is heading. ChatGPT, which was built on GPT-3.5, a successor to the 175-billion-parameter GPT-3 model, shocked the world when it came out in November 2022 because it could write text that sounded like it was written by a person.

It quickly became a cultural phenomenon, with people using it for everything from writing essays to answering tough questions. GPT-4 had raised the bar even higher by 2023. It could process and create text and images, and it had an estimated 1 trillion parameters.

People have been using ChatGPT in huge numbers. It got 100 million users in just two months after it came out, making it one of the fastest-growing consumer apps ever. Its flexibility has changed many fields, including education, customer service, software development, and content creation.

However, this rapid adoption has also raised worries about privacy, bias, and the potential for abuse, setting the stage for the apprehensions Altman expressed about ChatGPT-5.

Expected Features of GPT-5

OpenAI hasn’t said much about GPT-5, but news from the industry and hints from Altman give us an idea of what it will be able to do. According to reliable sources, the following features are anticipated:

| Feature | Description |
|---|---|
| Improved Reasoning | Enhanced multi-step reasoning for complex problem-solving. |
| Longer Memory | Increased context window for retaining and processing larger datasets. |
| Multimodal Capabilities | Seamless integration of text, images, audio, and potentially video. |
| Autonomous Task Execution | Ability to perform tasks like scheduling or data retrieval independently. |
| Step Toward AGI | Potential to approach Artificial General Intelligence, enabling human-like task performance. |

These advancements could make ChatGPT-5 a game-changer, enabling applications from advanced research to autonomous workflow management. But they also make the risks that Altman talked about worse, like the possibility that AI could get out of control or be used in ways that current protections can’t stop.

Ethical Concerns

Altman’s comparison of ChatGPT-5’s development to the Manhattan Project is not merely rhetorical. The Manhattan Project, which developed the atomic bomb during the 1940s, was a major scientific achievement. But it also raised unprecedented ethical challenges. Similarly, the advanced capabilities of ChatGPT-5 have sparked debates about control, accountability, and the potential impact on society.

Altman’s statement, “There are moments in the history of science, where you have a group of scientists look at their creation and just say, you know: ‘What have we done?’” reflects a moment of introspection about the consequences of unchecked innovation.

One major concern is the lack of robust oversight. Altman’s remark about “no adults in the room” suggests that the AI industry is moving faster than regulatory bodies can keep up. This is particularly alarming given incidents like the reported failure of OpenAI’s o3 model to obey shutdown commands, which prompted a one-word warning from Elon Musk: “Concerning”. These kinds of events show how important it is to have better safety rules and moral standards.

Another important issue is privacy. Altman has said before that talking to ChatGPT doesn’t have the same legal protections as talking to a doctor or therapist. This means that any personal information shared with the AI could be used in court. ChatGPT-5 is expected to be able to handle more complicated and personal data, which makes this worry even worse.

Industry Reactions

The tech community has reacted to Altman’s warning in a variety of ways. On sites like Reddit, some users take his worries seriously, while others dismiss them as strategic hype.

One Reddit user commented, “Classic Altman negging,” suggesting that the warning might be a tactic to build anticipation for ChatGPT-5. Another user countered, “Even if ChatGPT gives great advice, even if ChatGPT gives way better advice than any human therapist, something about collectively deciding we’re going to live our lives the way AI tells us feels bad and dangerous”.

Experts have also weighed in. Some say that Altman’s worries reflect genuine concern about where AI is going, especially as it gets closer to AGI. Others say that OpenAI’s business interests, such as pressure from Microsoft, which holds a $13.5 billion stake, to turn into a for-profit company, may color these public statements. This duality makes it harder to know how to read Altman’s intentions.

Broader Implications

ChatGPT-5’s expected features come at a time when AI is a big part of everyday life. AI is changing industries and the way we work and interact by powering virtual assistants and automating customer service. ChatGPT-5 could speed up this trend by automating difficult tasks and making people more productive in fields like healthcare, education, and research.

However, these advancements also amplify risks. The potential for AI to influence decision-making, from personal choices to public policy, raises concerns about over-reliance and loss of human agency.

Altman himself has cautioned against young people using ChatGPT as a life advisor, noting, “Something about collectively deciding we’re going to live our lives the way AI tells us feels bad and dangerous”.

Altman also talked about the risk of AI-driven fraud in another interview, which shows how important it is to have strong security measures.

The chart above shows how quickly the complexity of AI models is growing. For example, ChatGPT-5 is expected to have about 1.5 trillion parameters, up from GPT-4’s estimated 1 trillion and GPT-3’s 175 billion. This growth shows that AI is getting more powerful, but it also shows that managing it responsibly is getting harder.
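
To make the size of these jumps concrete, here is a minimal sketch that computes the growth factor between each generation. It uses only the parameter counts quoted in this article; the GPT-4 and GPT-5 figures are estimates and expectations, not numbers confirmed by OpenAI, and the names `PARAMS` and `growth_factors` are illustrative.

```python
# Parameter counts as quoted in this article. The GPT-4 and GPT-5 values
# are estimates or expectations, not figures confirmed by OpenAI.
PARAMS = {
    "GPT-3": 175e9,
    "GPT-4": 1e12,
    "GPT-5": 1.5e12,
}


def growth_factors(params):
    """Return (previous, next, ratio) for each consecutive pair of models."""
    names = list(params)
    return [
        (prev, nxt, params[nxt] / params[prev])
        for prev, nxt in zip(names, names[1:])
    ]


for prev, nxt, ratio in growth_factors(PARAMS):
    print(f"{prev} -> {nxt}: {ratio:.2f}x")
```

On these figures, the step from GPT-3 to GPT-4 is roughly 5.7x, while the expected step from GPT-4 to GPT-5 is 1.5x, so raw parameter count alone captures only part of the change in capability described here.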

Sam Altman’s strong warning about ChatGPT-5 is a wake-up call for both the AI industry and society as a whole. As we enter a new era, AI brings big benefits, including better reasoning, the ability to work with multiple modes, and progress toward AGI, but it also brings big risks.

Altman’s comparison to the Manhattan Project and his call for “adults in the room” show how important it is to have ethical guidelines, government oversight, and public debate to make sure that AI works in the best interests of people.

As ChatGPT-5 prepares to launch, the tech community, policymakers, and users must grapple with the question Altman posed: “What have we done?” The answer lies in our ability to balance innovation with responsibility, ensuring that AI’s transformative power is harnessed for good while mitigating its potential harms.
